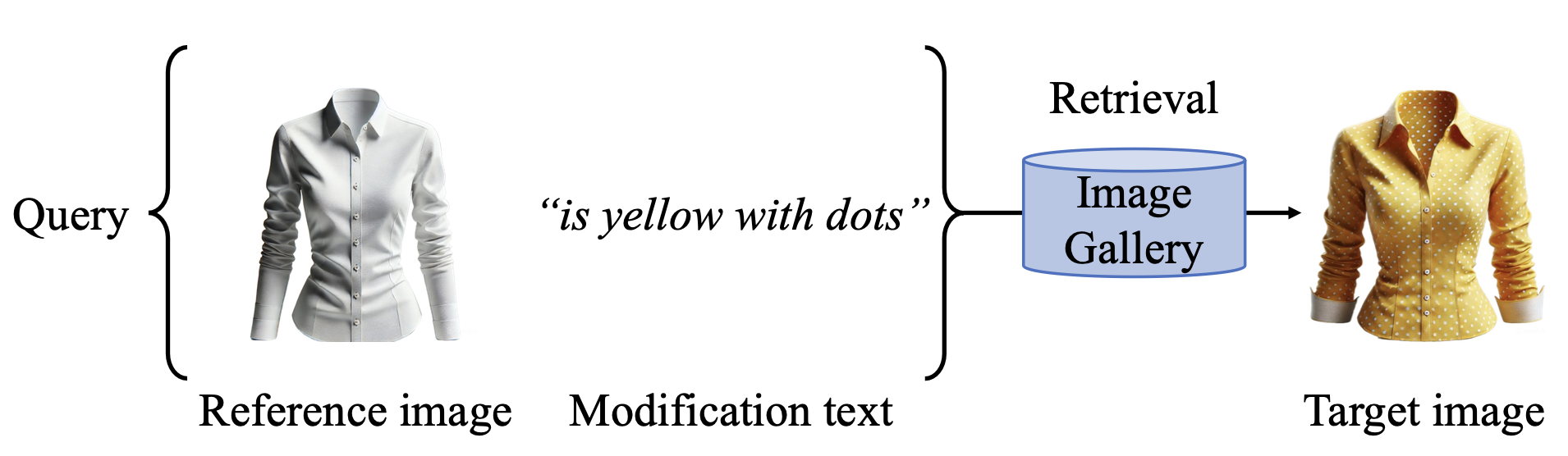

Composed Image Retrieval (CIR) is a complex task that aims to retrieve images based on a multimodal query. Typical training data consists of triplets containing a reference image, a textual description of desired modifications, and the target image, which are expensive and time-consuming to acquire. The scarcity of CIR datasets has led to zero-shot approaches utilizing synthetic triplets or leveraging vision-language models (VLMs) with ubiquitous web-crawled image-caption pairs. However, these methods have significant limitations: synthetic triplets suffer from limited scale, lack of diversity, and unnatural modification text, while image-caption pairs hinder joint embedding learning of the multimodal query due to the absence of triplet data. Moreover, existing approaches struggle with complex and nuanced modification texts that demand sophisticated fusion and understanding of vision and language modalities. We present CoLLM, a one-stop framework that effectively addresses these limitations. Our approach generates triplets on the fly from image-caption pairs, enabling supervised training without manual annotation. We leverage Large Language Models (LLMs) to generate joint embeddings of reference images and modification texts, facilitating deeper multimodal fusion. Additionally, we introduce Multi-Text CIR (MTCIR), a large-scale dataset comprising 3.4M samples, and refine existing CIR benchmarks (CIRR and Fashion-IQ) to enhance evaluation reliability. Experimental results demonstrate that CoLLM achieves state-of-the-art performance across multiple CIR benchmarks and settings. MTCIR yields competitive results, with up to 15% performance improvement. Our refined benchmarks provide more reliable evaluation metrics for CIR models, contributing to the advancement of this important field.

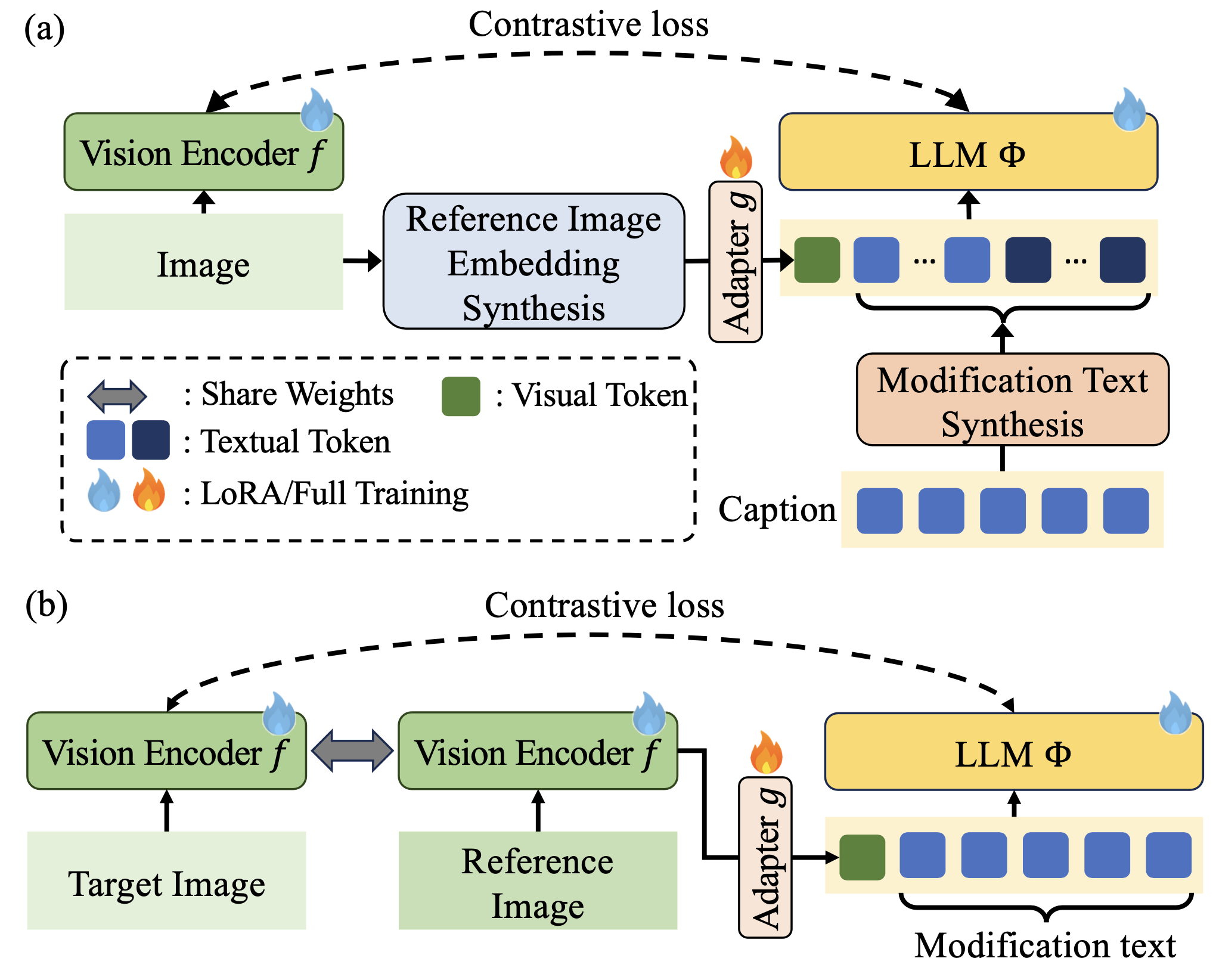

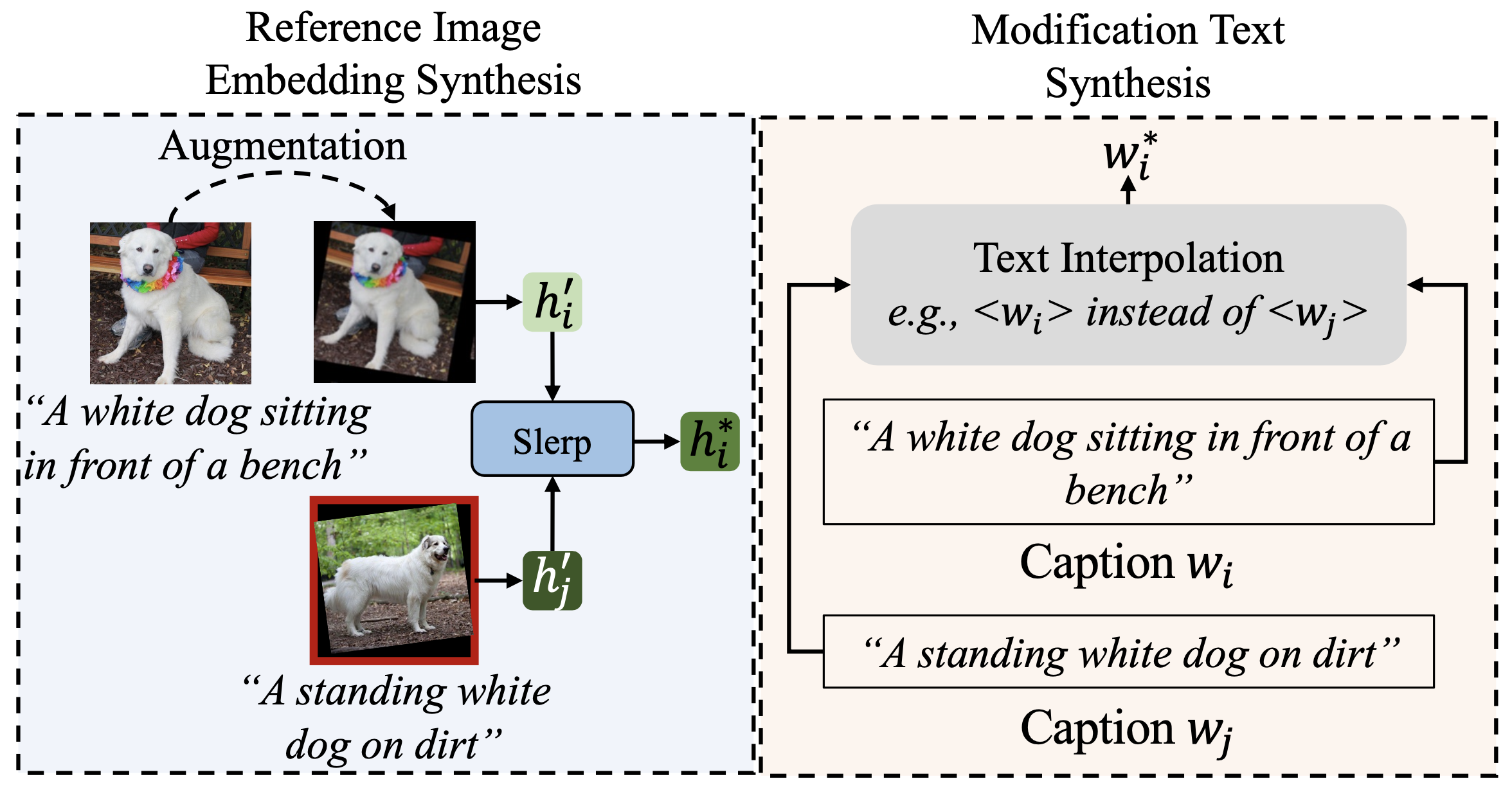

Our model can be trained flexibly with (a) image-caption pairs and (b) composed image retrieval triplets. When only image-caption pairs are available, the image and text of each training query are synthesized by interpolating between similar in-batch image-caption pairs.
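The in-batch synthesis idea can be illustrated with a minimal sketch. Everything below is an assumption made for illustration, not the released implementation: the function names, the use of plain linear interpolation followed by re-normalization, the fixed `alpha`, the text template, and the choice of the original sample as the retrieval target are all hypothetical.

```python
import math

def normalize(v):
    """Scale a vector to unit length (returned unchanged if its norm is zero)."""
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v] if norm > 0 else list(v)

def nearest_neighbor(i, embs):
    """Index of the most similar *other* item in the batch (cosine on unit vectors)."""
    best_j, best_sim = None, -2.0
    for j, e in enumerate(embs):
        if j == i:
            continue
        sim = sum(a * b for a, b in zip(embs[i], e))
        if sim > best_sim:
            best_j, best_sim = j, sim
    return best_j

def synthesize_triplets(image_embs, captions, alpha=0.5):
    """Build (reference_embedding, modification_text, target_index) per sample.

    Assumptions (hypothetical, for illustration only):
      - the reference embedding is a linear blend of sample i and its
        nearest in-batch neighbor j, re-normalized to unit length;
      - the modification text is filled into a simple template;
      - the retrieval target is the original sample i.
    """
    embs = [normalize(v) for v in image_embs]
    triplets = []
    for i in range(len(embs)):
        j = nearest_neighbor(i, embs)
        blended = [(1 - alpha) * a + alpha * b for a, b in zip(embs[i], embs[j])]
        reference = normalize(blended)
        mod_text = f"change '{captions[j]}' into '{captions[i]}'"  # hypothetical template
        triplets.append((reference, mod_text, i))
    return triplets

batch_embs = [[1.0, 0.0], [0.9, 0.1], [0.0, 1.0]]
batch_caps = ["a red dress", "a pink dress", "a blue car"]
for reference, text, target in synthesize_triplets(batch_embs, batch_caps):
    print(target, text)
```

Because the neighbors come from the current batch, a sketch like this needs no stored triplets; the supervision signal is rebuilt for every batch.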

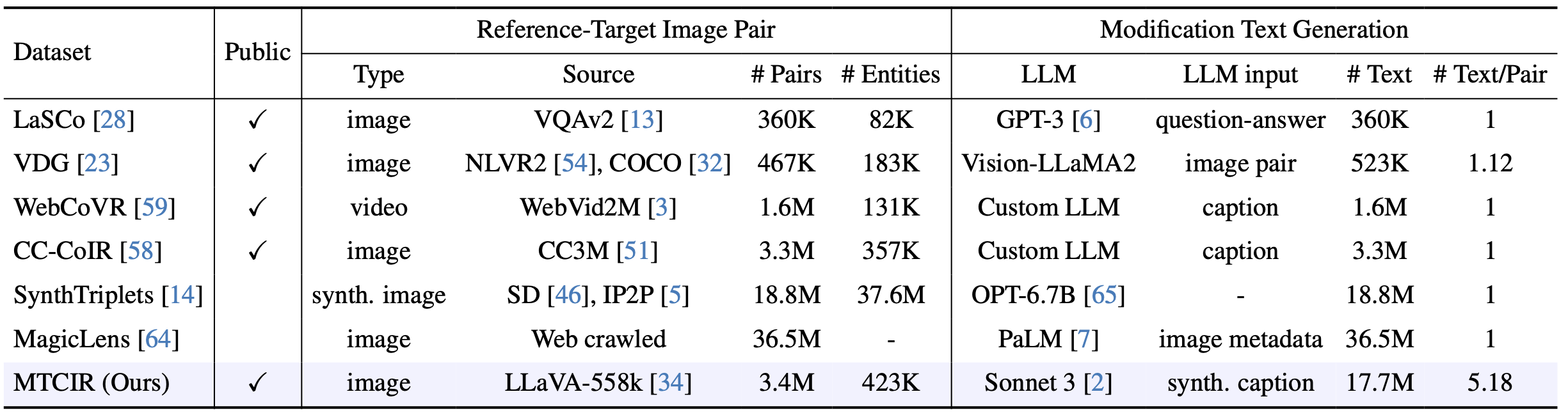

We create the largest public dataset with diverse samples: 3.4M triplets and 17.7M human-aligned modification texts generated by Claude 3 Sonnet.

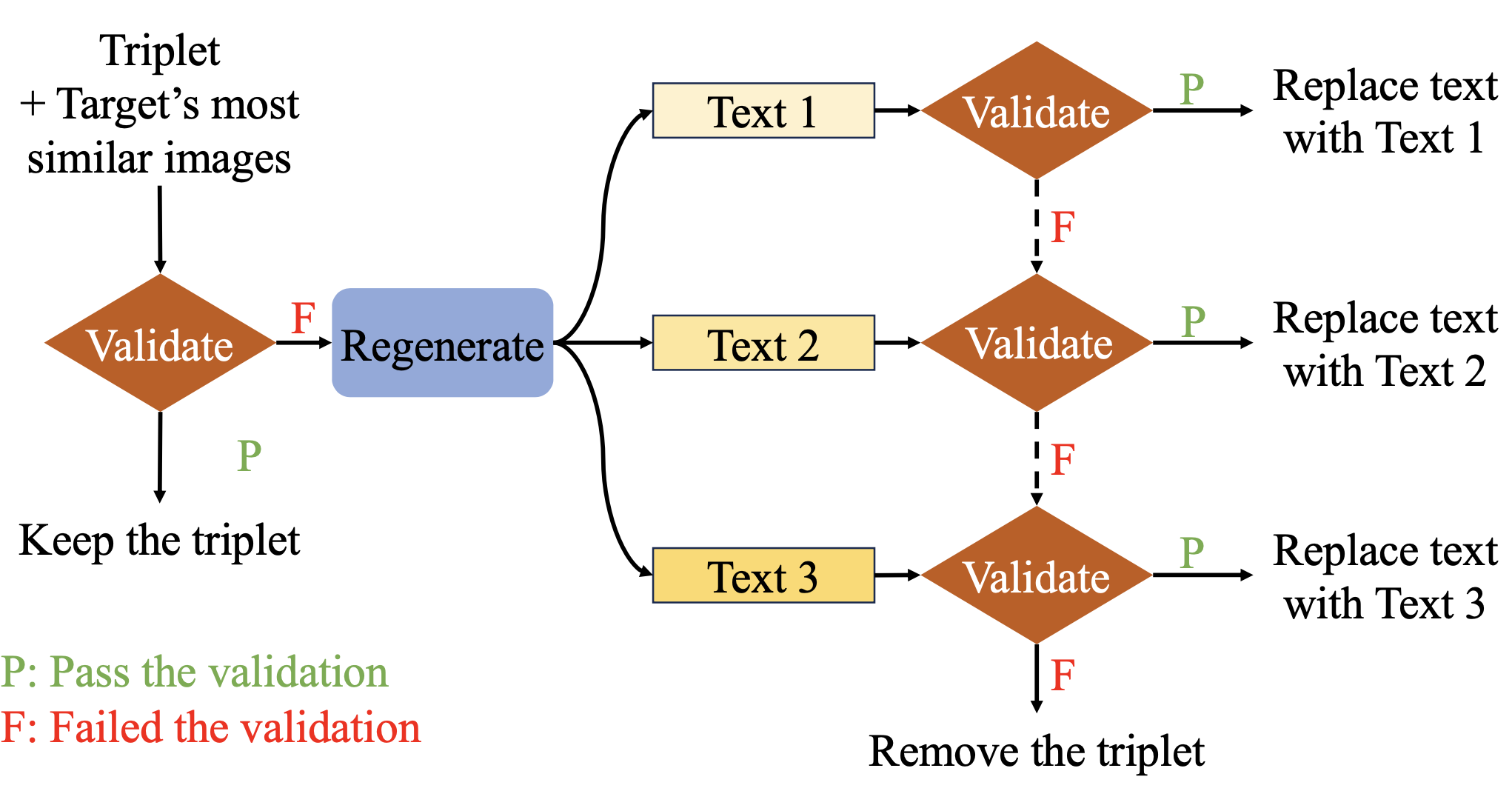

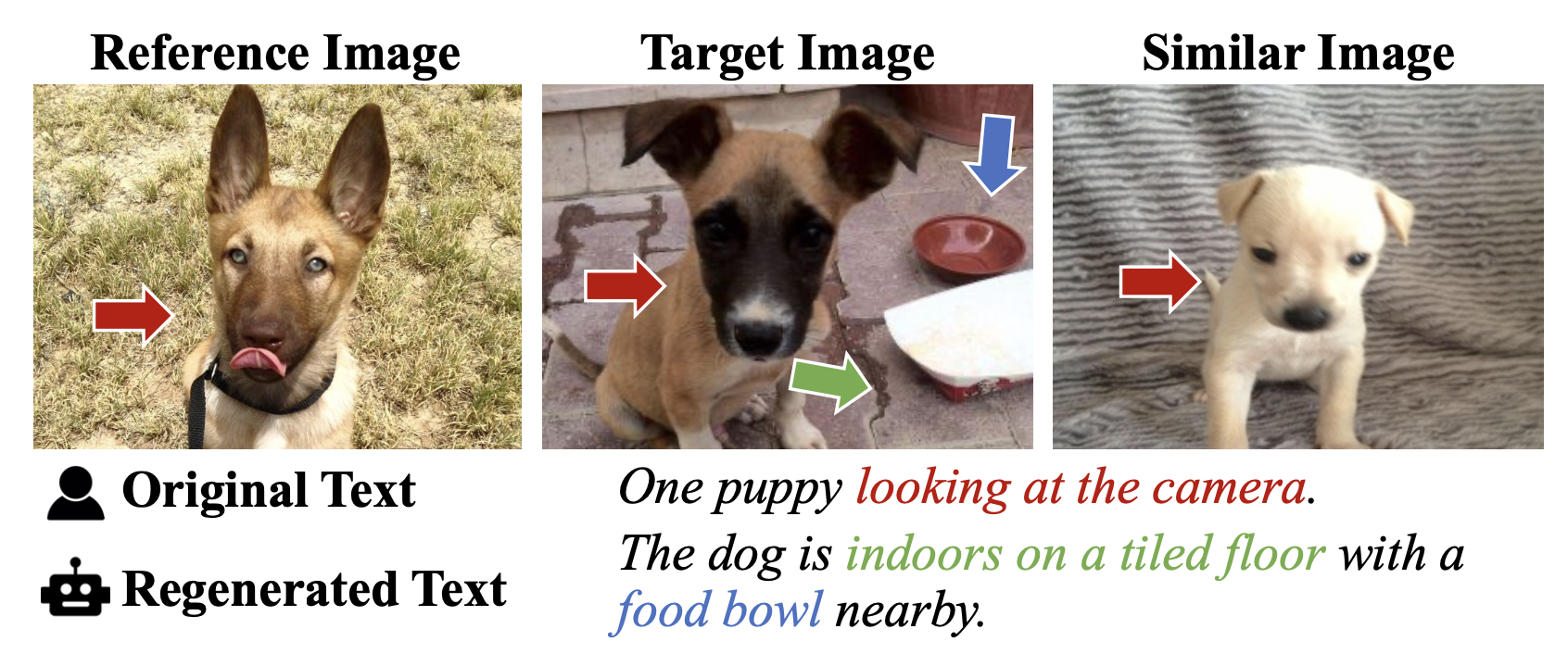

We refine the existing benchmarks by rewriting the modification texts of ambiguous samples with Claude 3 Sonnet. The full pipeline is shown on the left, and a refined example is shown on the right:

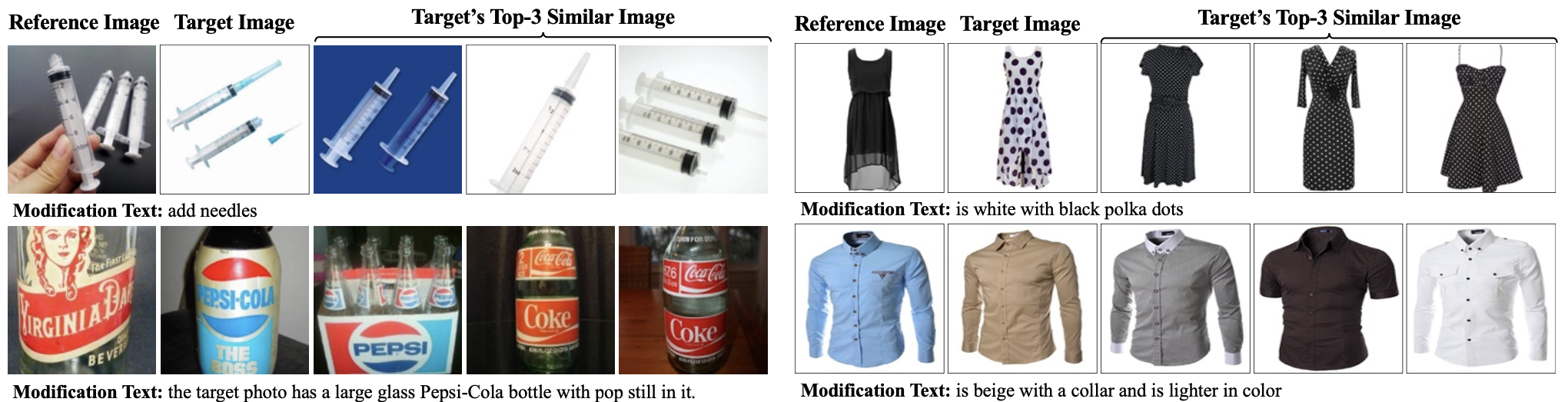

Examples of "good" samples kept in the refined benchmarks: CIRR (left) and Fashion-IQ (right)

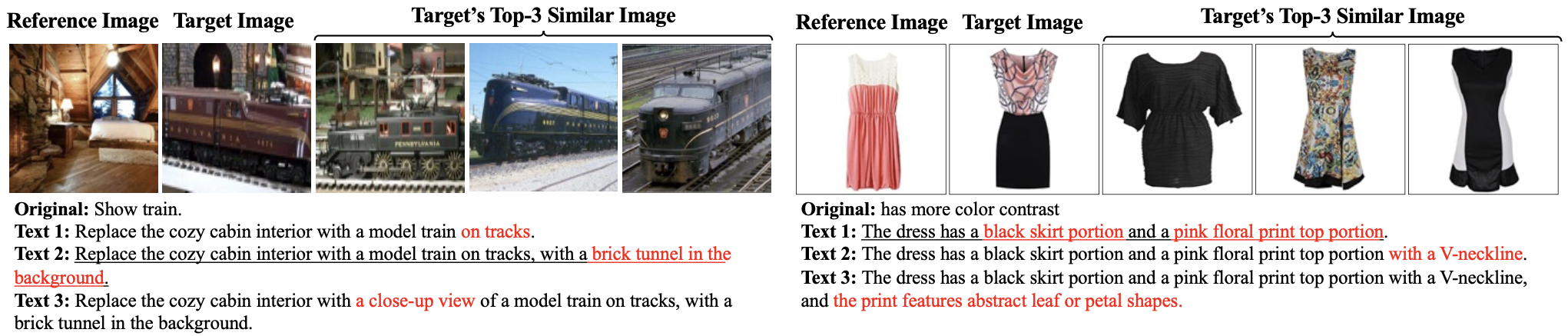

Examples of "bad" samples and regenerated texts in the refined benchmarks: CIRR (left) and Fashion-IQ (right)

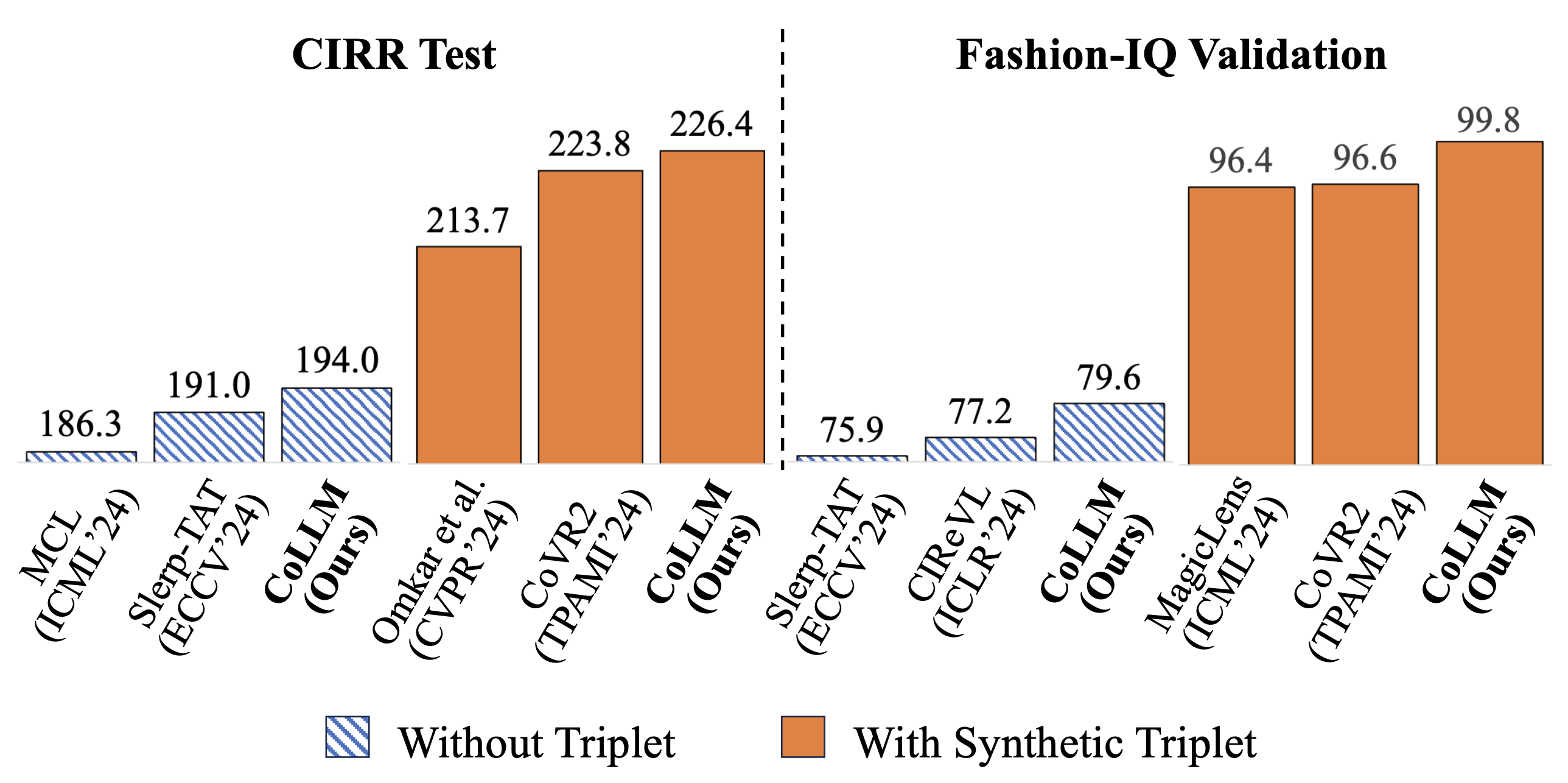

Our framework achieves the highest Recall Sum at {1, 10, 50} for CIRR and {10, 50} for Fashion-IQ in two training scenarios: (i) without triplet data and (ii) with synthetic triplet data (our MTCIR).
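For readers unfamiliar with the metric, the sketch below shows one common way to compute Recall@K and a sum over several K values. It assumes that "Recall Sum" means the sum of Recall@K over the listed cutoffs and that each query has a single target; the function names and toy data are hypothetical, and the benchmarks' official evaluation scripts may differ in detail.

```python
def recall_at_k(rankings, targets, k):
    """Percentage of queries whose target appears among the top-k retrieved items.

    rankings: one list per query, gallery IDs ordered from best to worst match.
    targets:  one ground-truth gallery ID per query (single-target assumption).
    """
    hits = sum(1 for ranked, target in zip(rankings, targets) if target in ranked[:k])
    return 100.0 * hits / len(targets)

def recall_sum(rankings, targets, ks):
    """Sum of Recall@K over the given cutoffs (assumed meaning of 'Recall Sum')."""
    return sum(recall_at_k(rankings, targets, k) for k in ks)

# Toy example with two queries over a three-image gallery.
toy_rankings = [["img_a", "img_b", "img_c"], ["img_c", "img_a", "img_b"]]
toy_targets = ["img_a", "img_b"]
print(recall_at_k(toy_rankings, toy_targets, 1))
print(recall_sum(toy_rankings, toy_targets, ks=(1, 3)))
```

Summing over several cutoffs rewards models that rank the target both at the very top and within a longer shortlist.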

Our model shows better composed-query understanding on both the CIRR and Fashion-IQ benchmarks, even without training on triplets:

The refined text helps resolve ambiguity in the benchmarks, so the model can now retrieve the correct target image:

@InProceedings{huynh2025collm,
  author = {Huynh, Chuong and Yang, Jinyu and Tawari, Ashish and Shah, Mubarak and Tran, Son and Hamid, Raffay and Chilimbi, Trishul and Shrivastava, Abhinav},
  title = {CoLLM: A Large Language Model for Composed Image Retrieval},
  booktitle = {Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR)},
  year = {2025}
}